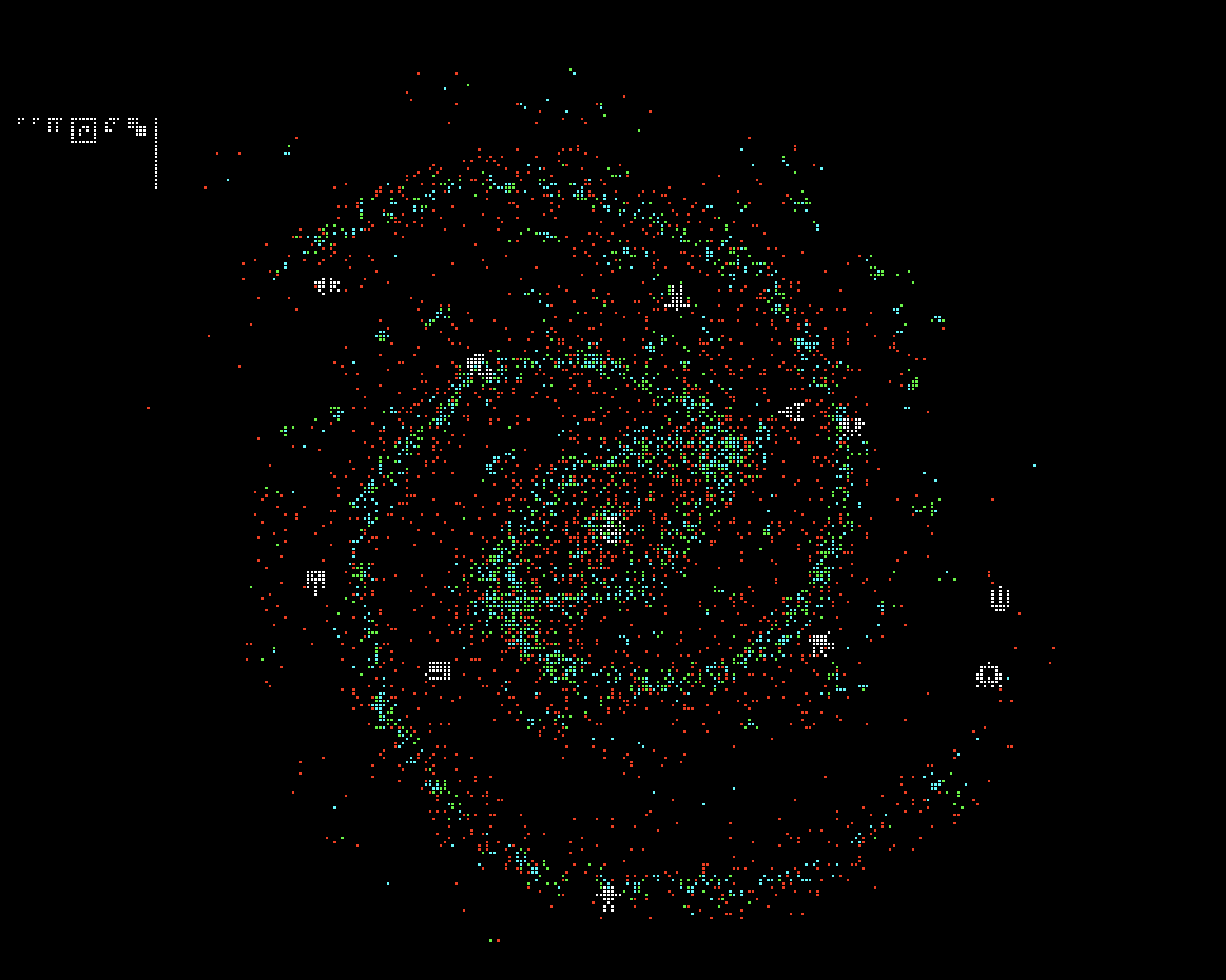

ICFPC 2020: galaxy.txt

This year was about running the obfuscated program from galaxy.txt and

then writing bots to compete against each other in a space battle.

This was my 11-th year participating in ICFPC. Below are some of my random thoughts and screenshots.

General Impression

Good

- Great storyline and teasers before contest

- Videos from crazy Russian scientists were quite heart-warming

- Contest infrastructure was extremely good

- time to prepare and test submission well advance before contest

- docker submissions work seamlessly

- UI to see your submissions and release new versions

- Vizualizations of battles

- Online leaderboard to compete with other teams

- Well thought scoring system

- True rank guarantees that there is no advantage in submitting earlier in stage, so you can focus on the stage itself without unnecessary rush

- Scoring in stages encourages early submissions of good strategies, so teams dont wait until last minute to reveal their best strategy

- On the other hand stage scores dont define everything and as long as you get to top20 the final results are solely determined by final round.

Not so good

- Pre-contest teasers were too tied to the main task

- Folks who got faimiliar with grid-encoded integers and other glyphs were at advantage when navigating galaxy UI

- Collaborative task description

- This broke the expectations about the format and many people (myself included) were unhappy about that.

- Even after galaxy.txt was released there was no clear understanding what pieces of information scattered around is relevant what’s not.

- I think it would’ve been much better to abandon the pre-contest “its aliens communicating with us” storyline once contest starts and just release the full pdf with all the rules. It’s okay to have aliens legend there, just dont be cryptic there. Also being very explicit about unknonws would be a good thing as well: “hey, game mechanics is unknown, so you need to reverse engineer it using clues from galaxy UI”.

- Trying to put too many different themes into contest

- Overall this isn’t a bad thing, but I think the main issue is that the basic task of running galaxy.txt was too hard without any intermediate steps in between to guide your progress (results of lightning round that only a couple of teams were able to do that is a great confirmation)

- Our team was only able to have working galaxy 12 before the end of contest and we were already very tired from struggling to make it work so simply didnt have any energy left to reverse-engineer game mechanics, build AI bot, adapt to metagame, etc..

- Puzzle nature of the task

- This one is interesting: in the past I really enjoyed solving 2006 and 2007 years which had similar nature and was wondering why more recent icfpcs never follow the pattern. But when given such task myself in 2020 I had mixed feelings. Why?

- My best explanation is that what works great for no-rush offline exploration is not very suitable for 72-hour contest. The puzzle usually allow only 1 correct answer and either you guessed it or not. This doesnt leave much room for creativity and competetiveness which is the most fun.

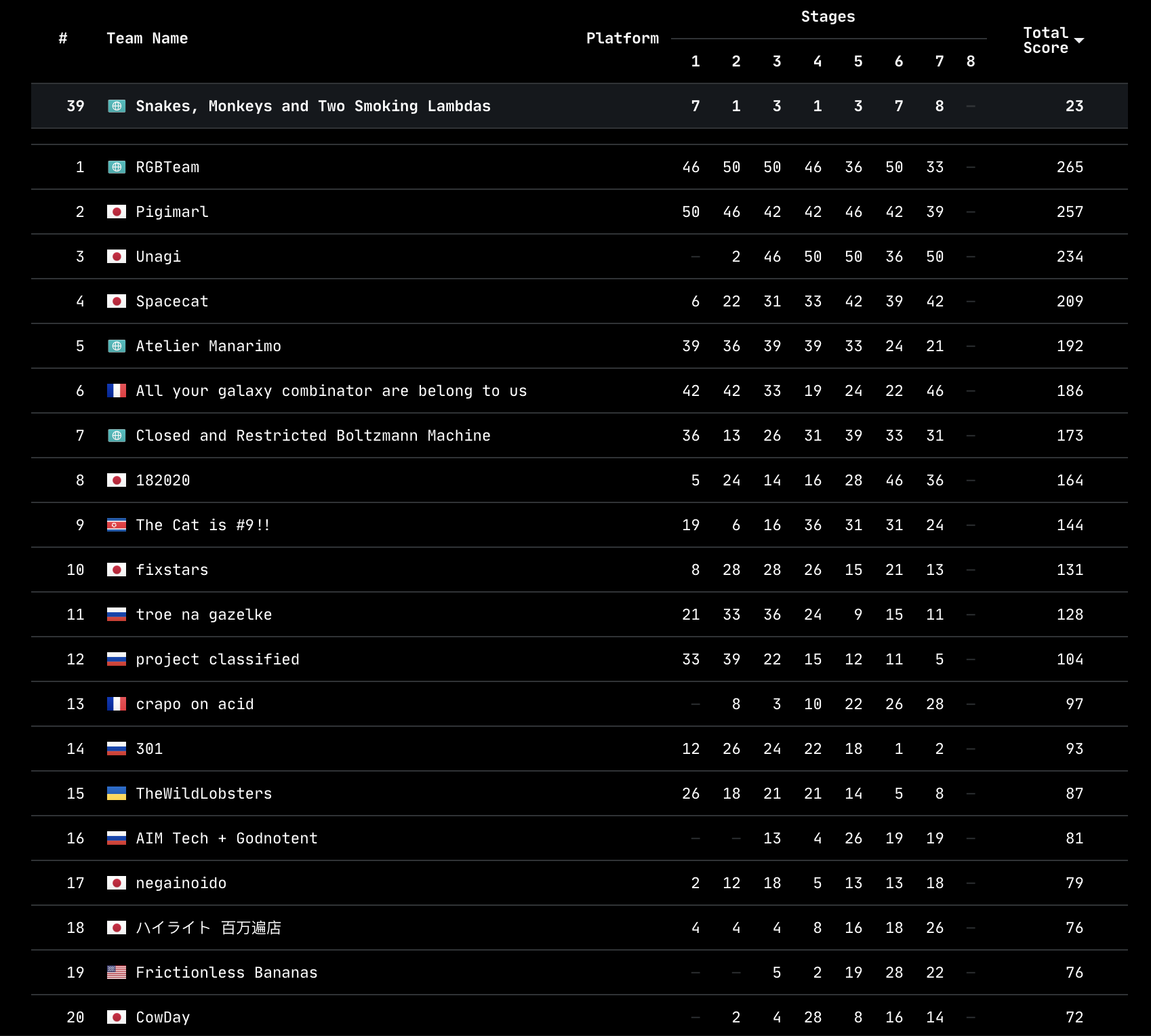

Final Results

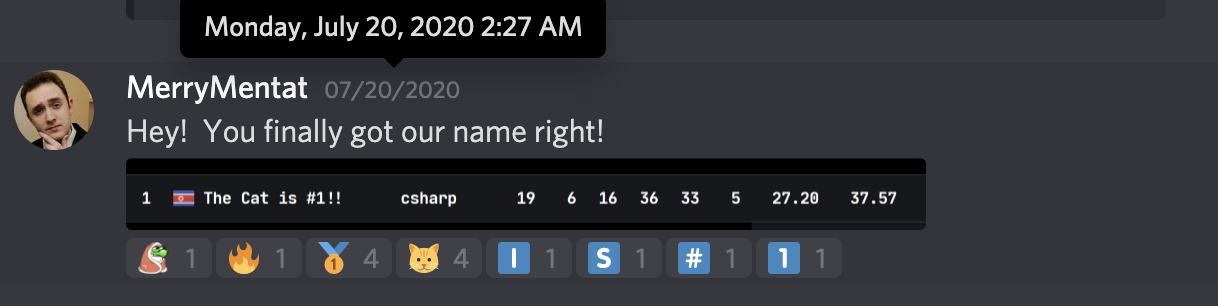

Our team finished at 40th place:

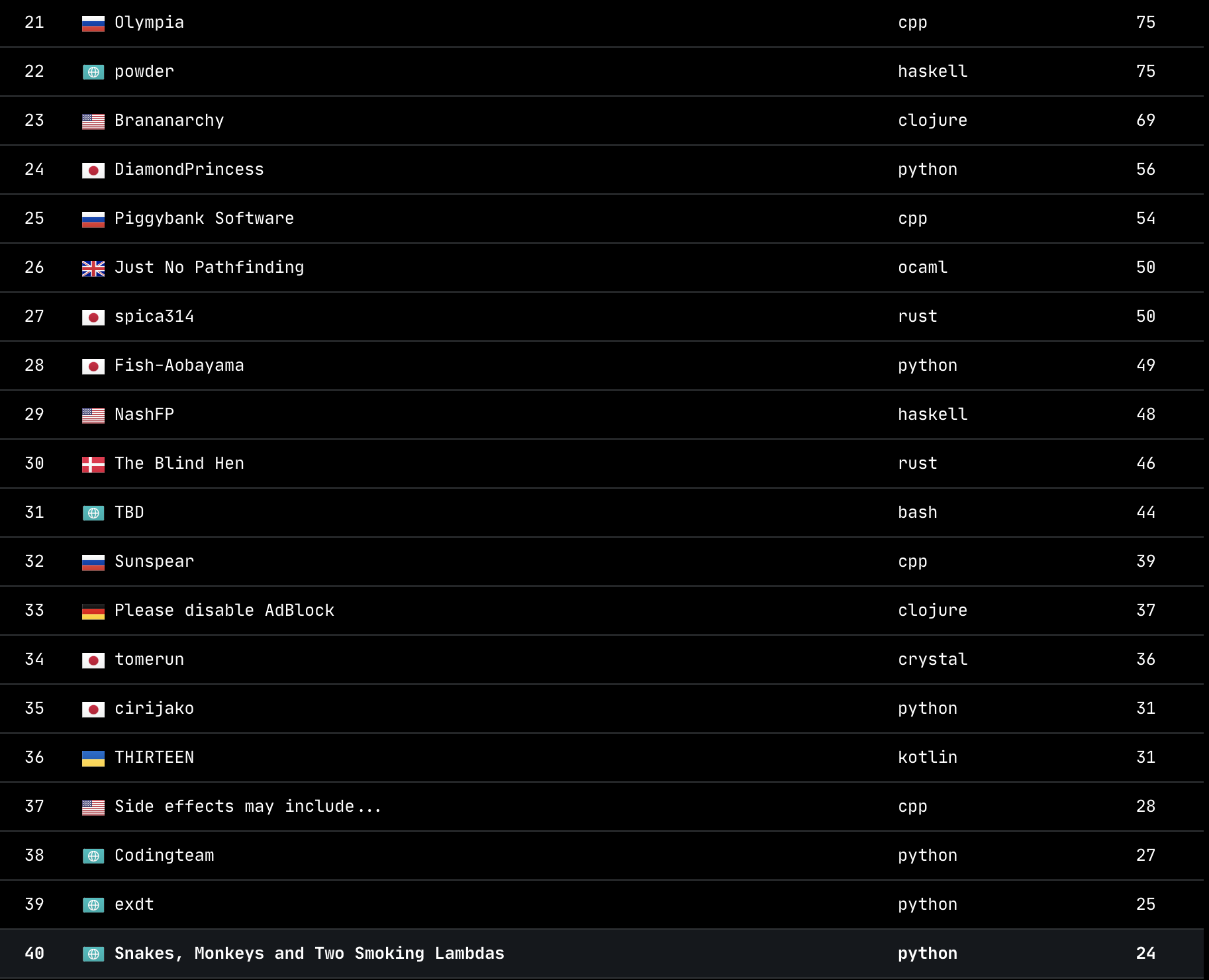

Exact top20 is still unknown, but here is the top20 from the frozen scoreboard:

Below goes more details about the timeline.

Before contest

T – (2 weeks)

Around 2 weeks before contest, ICFPC organizers retweeted a video from astronomer Ivan Zaitsev who received some weird signals from space and needs help from community in decoding them.

The message was provided as .wav file and after collaborative efforts in discord figured out the following sequence of actions to decode it.

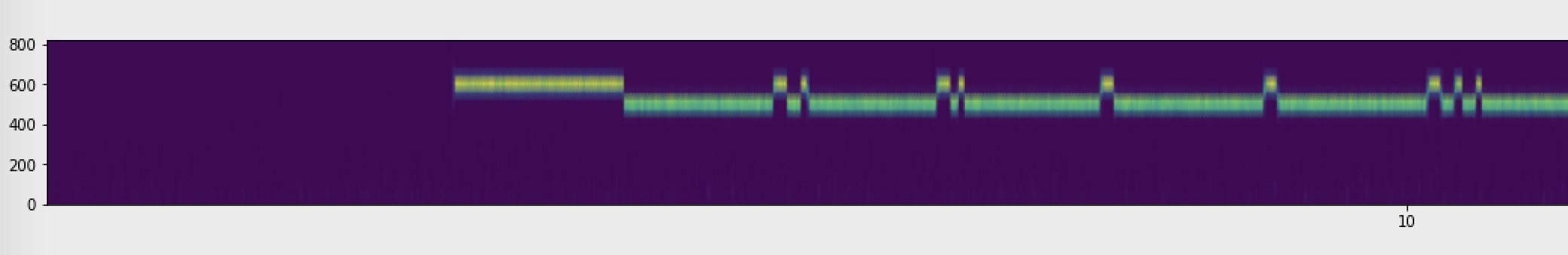

- Get the spectrogram of audio

- Convert low and high frequency spans into black and white squares respectively.

- Rearrange these squares into 2d grid instead of a line

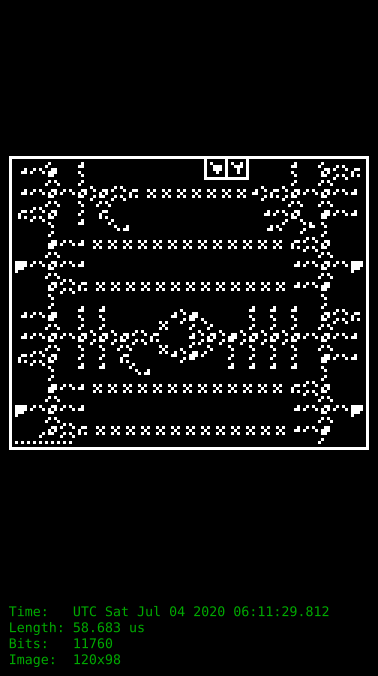

This is how first 10 seconds of spectrogram look like:

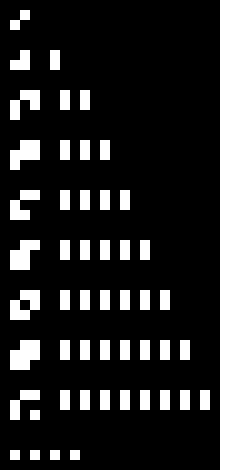

And this is the decoded message:

Of course it was obvious that this is traditional teasers about the theme of the upcoming contest, but nevertheless it was quite fun to maintain the legend and discuss the messages as if they were coming from real aliens.

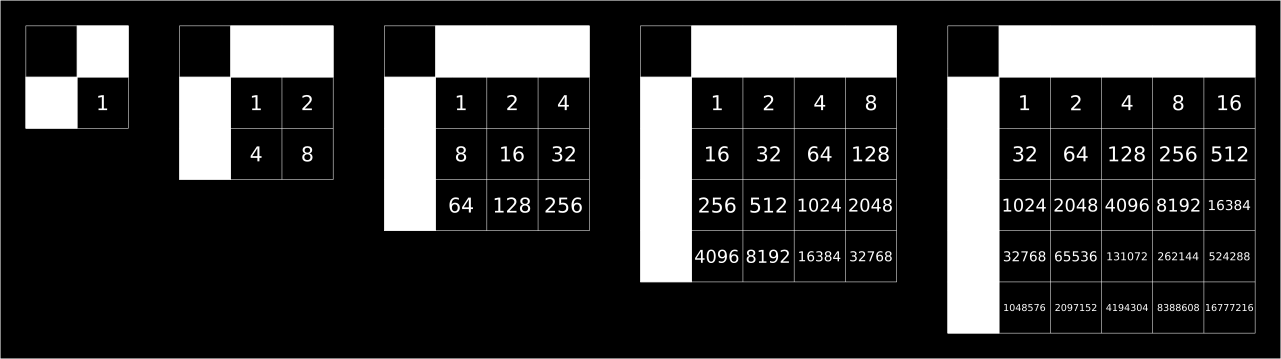

As was quickly deducted, the glyphs on the left represent integers.

Turns out transmissions started to repeat every day and now were kindly provided as images so there was no need to do any audio processing.

Further transmissions confirmed the hypothesis about the formula for integers. Positions represent bits and white square means that the bit was set:

T – (1 week)

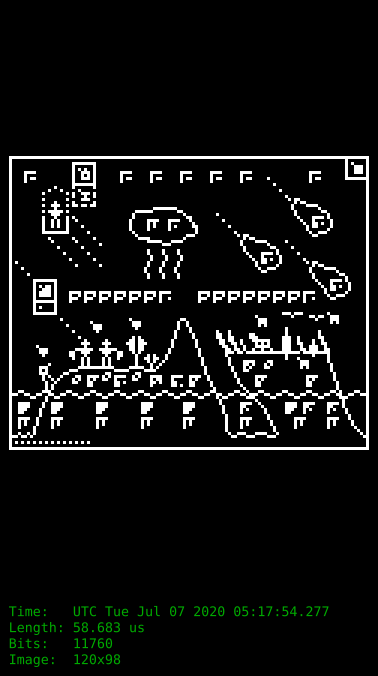

There was coming more message: 1 every day.

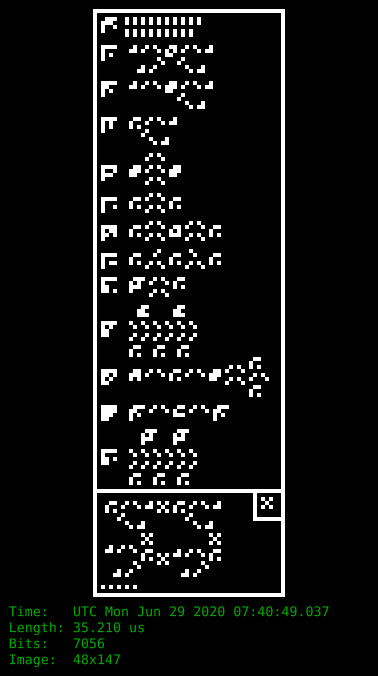

The fun part there was prank from another observatory getting message from space and inviting to decipher those. Some examples:

Day 1

T – (30 minutes)

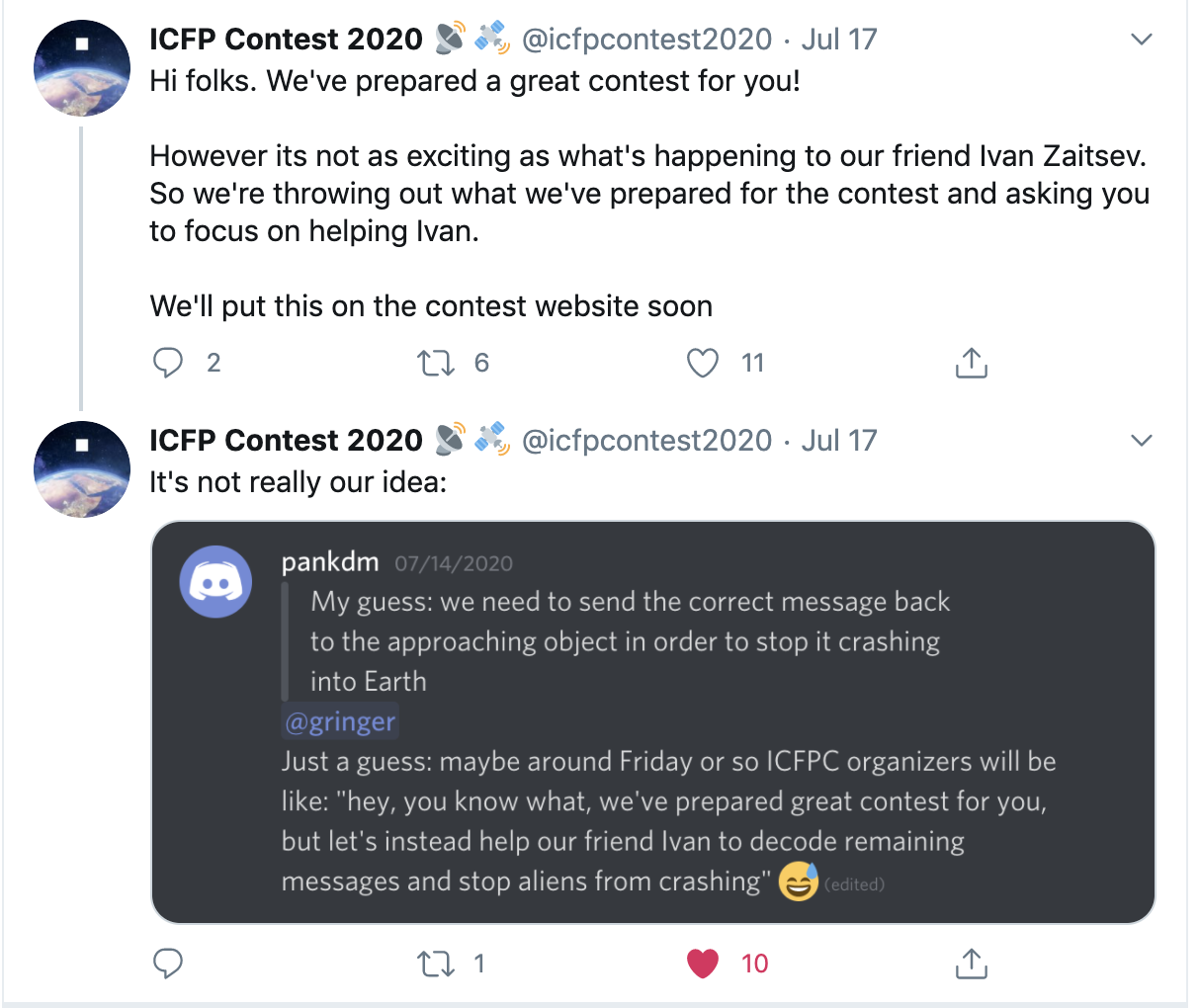

Organizer officially announced that contest would be a continuation of pegovka investigation. That’s also where my joke was quoted to be the source of this idea:

T + (0 hours)

I woke up just a little before 6am local time to start reading task description. Nothing was there yet. Maybe 10-15 minutes later there were more messages. But there was literally nothing else! Only new messages up to 42th provided as images and a suggestion for participants to decode those first:

Okay, I thought maybe let’s try helping there. I took my rust annotator and run all messages through it and pushed to our repo to make it more readable than raw messages. Suggested a few interpretations, but overall chat collectively was moving faster than I could help, so switched to strategy of waiting.

T + (1.5 hours)

An offcial statement from organizers that the contest won’t move forward until all glyphs are decoded:

Our friend Ivan Zaitsev says that a new message which appears to be REALLY HUGE is being received right now. We don’t really know if we’ll be able to render this message as an image. In this case, we’ll post this message as text. That is why it is very important that you give names to unknown symbols in the messages.

Not much was happening since then:

- More glyphs were decoded

- Ivan Zaitsev was able to send something to aliens

- Ivan Zaitsev received a sequence of 0 and 1 in response

- Organizers put a proxy where everyone could send their requests

- We tried sending some requests through proxy and found only 2 patterns:

- Sending

[0]gets[1, X]where X is the number of seconds before main round end - Sending anything else we tried gets

[0]

- Sending

T + (4.5 hours)

Finally galaxy.txt was released! That’s when we actually could start doing something meaningful!

We wrote a simple token parser and got unique lexems:

unique_lexems: {'cons', 'b', 'c', 't', 'ap', 's', 'mul', 'i', 'cdr', 'nil', 'eq', 'add', 'lt', 'car', 'div', 'neg', 'isnil'}

this means that we actually need to implement all the provided operation for galaxy to work.

We decided to write a very naive evaluator with the idea to start running it and see where it breaks. At that point it feelt like the main complexity will be in running the galaxy.txt program and just implementing the operators won’t be enough. There intentionally might be some very inefficient operations that would need to be spotted and implemented in more efficient terms (e.g. like writing optimizer for this calculus). For example the program might express the multiplication with integers using for-loop and addition.

T + (8 hours)

Organizers released a few short videos on the basics of tree evaluation. There were 3 main ideas:

- Representing expression as syntax tree

- Lazy evaluation

- S-combinator and caching the expressions

By that time we just figured out how to parse expression into syntax tree, so in some sense the videos were helpful in further understanding. But still they were too basic to give any concrete direction: lazy evaluation and memoization techniques were well known to us.

T + (15 hours)

By that time we implemented a first version of evaluator that was evaluating subtrees using python lambda functions and combining results. Something like that:

def evaluate(self, depth=0):

# ...

if self.name == "ap":

op0 = self.args[0]

op1 = self.args[1]

x0 = op0.evaluate(depth + 1)

x1 = op1.evaluate(depth + 1)

return x0(x1)

# ...

elif self.name == "c":

return lambda x: lambda y: lambda z: x(z)(y)

# ...

Obviously it wasn’t lazy enough so was failing with infite recursion on the program:

:2048 = ap f :2048

galaxy = ap :2048 42

We hacked a fix for that into "ap" definition:

if self.name == "ap":

op0 = self.args[0]

op1 = self.args[1]

if op0.name == "f":

return lambda y: y

x0 = op0.evaluate(depth + 1)

x1 = op1.evaluate(depth + 1)

return x0(x1)

But then the lazyness for t-combinator wasn’t obvious how to fix, since the expression would be evaluated as a separate subtree from the main "ap" application.

Another problem was with "eq" operator since both parts of the expression could be symbolic we wouldn’t have a way to compare them once they are represented as lambda functions.

The next idea was to build a better tool annotate.py to understand galaxy.txt and from the bottom-up start simplification of the program:

- Add parenthesis into original expression

- Add human-readable annotations for known expressions (integer, list, etc)

- Start gradually building the whitelist of already evaluated expressions

- For the next expression replace the ones from whitelist and a

- Repeat steps 2. - 4. until only recursive functions left

- Think more what to do next

T + (16 hours)

In parallel we were developing similar naive approach, but using our own CurriedFunction class instead of python lambdas that would give us better control and introspection over the galaxy execution. (e.g. it could tell how much arguments were already applied to operator)

We added a support for those into annotate.py and were able to get something like this in galaxy-annotated.txt:

:1248 = (ap neg 14)

-14

:1484 = (ap :1115 nil)

???

:1109 = (ap (ap cons 0) nil)

<CONS() (2 of 3 args applied)>

:1175 = (ap (ap c i) t)

<C() (2 of 3 args applied)>

T + (18 hours)

Got into realization that annotations approach is still too brute-forcy and won’t get us anywhere. Dropped the efforts here until the next day.

T + (22 hours)

Got more progress with CurredFunction approach. Got a better syntax for constructing syntax trees and were able to run simple galaxy_test program. Still not sure how eventually

Day 2

Now switching to local times as it’s easier to track, but the conversion rule is

T + 24 hours == 6am PST Day 2

6am PST

- Lightnnig round is over. The main goal was revealaed and this is fighting in AI battle

- Started implementing tree substituion approach for tree evaluation (3rd one total). Key observations compared to previous attemtps:

- There is no point in trying to evaluate the raw galaxy itself. In the end you would just get a partially implemented function which might not even be reduced further. The right approach is to evaluate galaxy run from (0, 0) and starting state:

galaxy( (0, 0), []) - We can start with left-most symbol, substitute it with its definition and continue further. This would give some incremental progress and indication of that in numbers of substitutions.

- There is no point in trying to evaluate the raw galaxy itself. In the end you would just get a partially implemented function which might not even be reduced further. The right approach is to evaluate galaxy run from (0, 0) and starting state:

11am PST

- Still fixing bugs in tree substitution

- Implementing UI and interactor protocol

13.30 PST

- Organizers released pseudo code

- Substituion approach still felt full of bugs so we decided to switch to it

16.30 PST

- Running galaxy after implementing pseudo code

To run the galaxy:

python3 src/ui.py galaxy.txt

- Some unsuccessfull clicking in galaxy

- Tweaks for UI

- Demodulated fixes

23.35 PST

- Performance optimizations for galaxy evaluator

- Undo in Galaxy UI

Day 3

6am PST

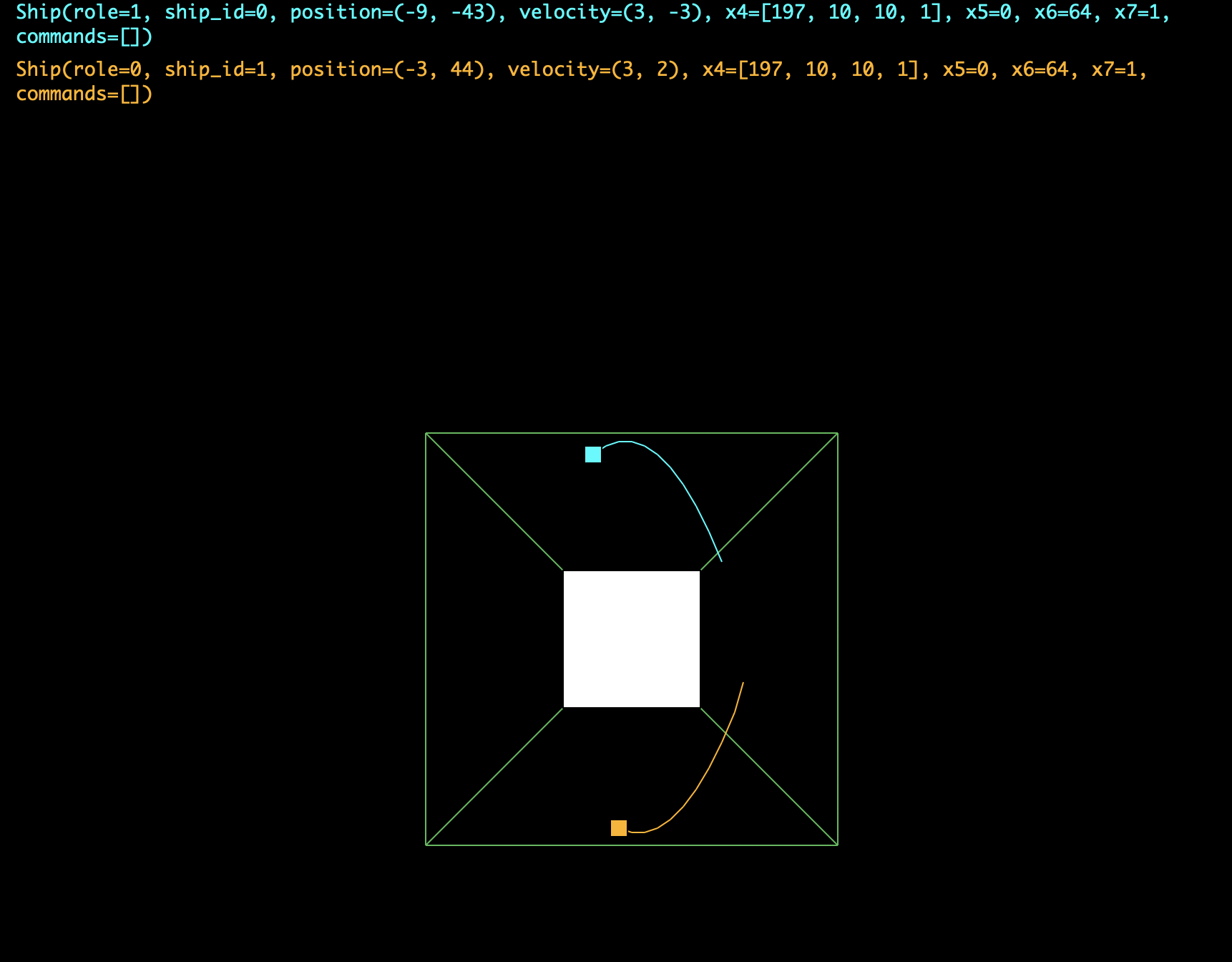

- Organizers released more details on game protocol + vizualizations of battles

7am PST

- Added layers toggle in UI

11:30am PST

- Implemented proxy evaluator

- Types for commands and parsing those

13:30 PST

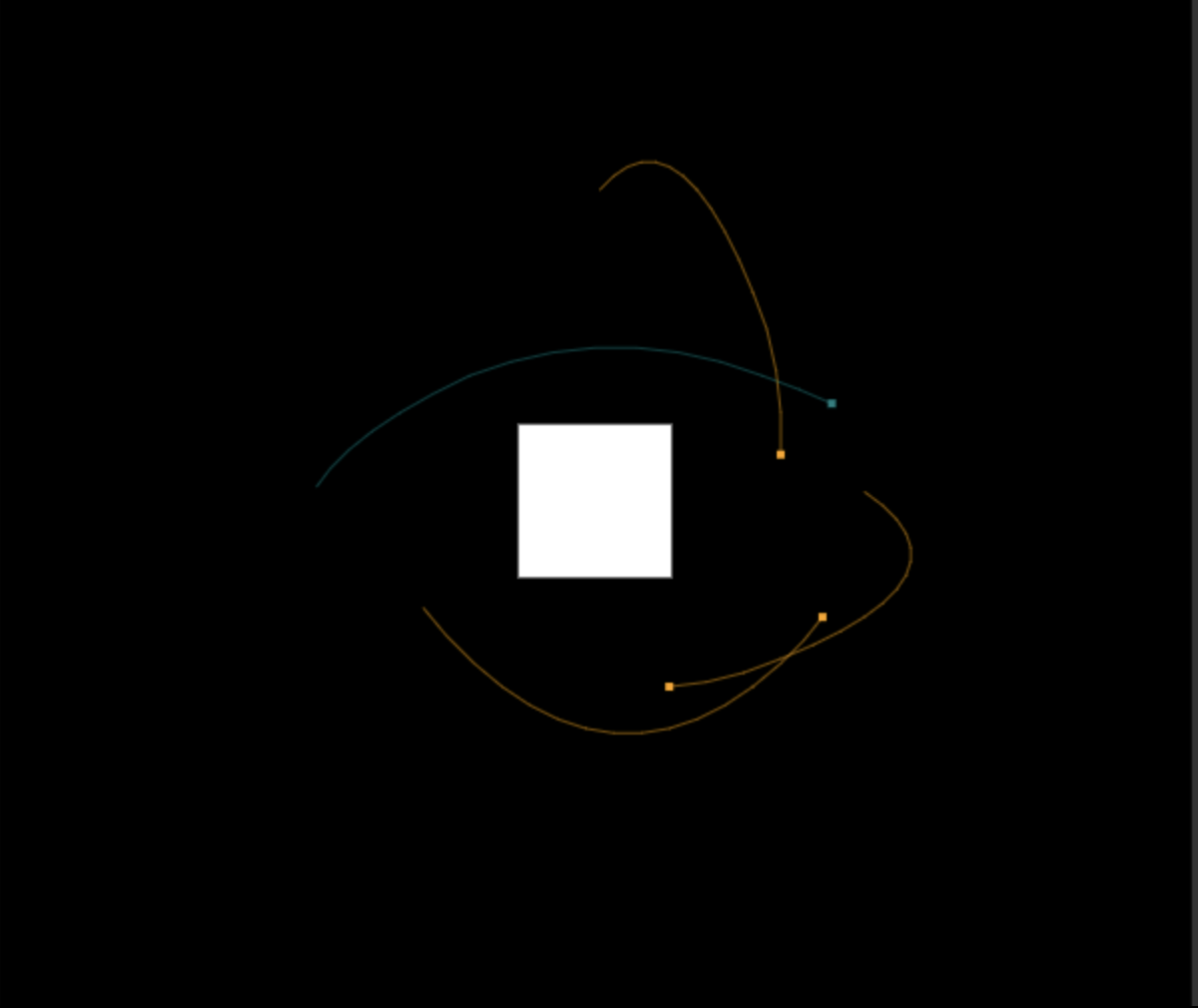

- First flying bot

14:15 PST

15:50 PST

- Simple UI for replay games

To run the UI:

python3 app/space_viz.py

- In parallel: reverse-engineering replays to understand game mechanics

- Giant refactoring of modulate/demodulate to fix inconsistencies

- Redo tutorials with logging and understanding how to apply shoot command

19:30 PST

- Fixing a bug in shooter bot to start actually shooting. Still often it doesn’t do any damage.

- Physics implementation for future trajectory viz. Later will be used for prediction in bots itself.

- Attempt to do a

ForkBotthat would fork to be harder to kill, but without proper orbiting it was kind of useless TrajectoryBot: brute-force the accelearate commands to find the trajectory that stays the most from the planet

22:00 PST

- Increased fuel for

FlyingBotto 200 to let it stay alive longer. Now getting more scores in leaderboard. - Shooting ahead with better logic for cooling.

- Tried to do memoization of orbits to follow. Offline for each position on the map pre-compute sequence of accelerations to not crash into the planet and use it as static table when running. Didn’t work for some reason.

- Some tweaks to

TrajectoryBotto actually start orbiting (mostly constants tuning)

Final submit:

bot = RoleSmartBot(attacker=ShooterBot(), defender=TrajectoryBot())

Essentially in both roles attacker and defender this was a TrajectoryBot that tried to stay as much as it could on the orbit.

In the attacker case it was shooting if the cooling was enough and could detonate itself if was within enough radius of the opposing ship and it was a single one.

Appendix: fun stuff from discord chat

- How little did we know about upcoming contest:

- Those who participated in ICPC will understand:

- Deciding what to send back to aliens:

- The storyline was strong with organizers:

- More jokes about the contest format:

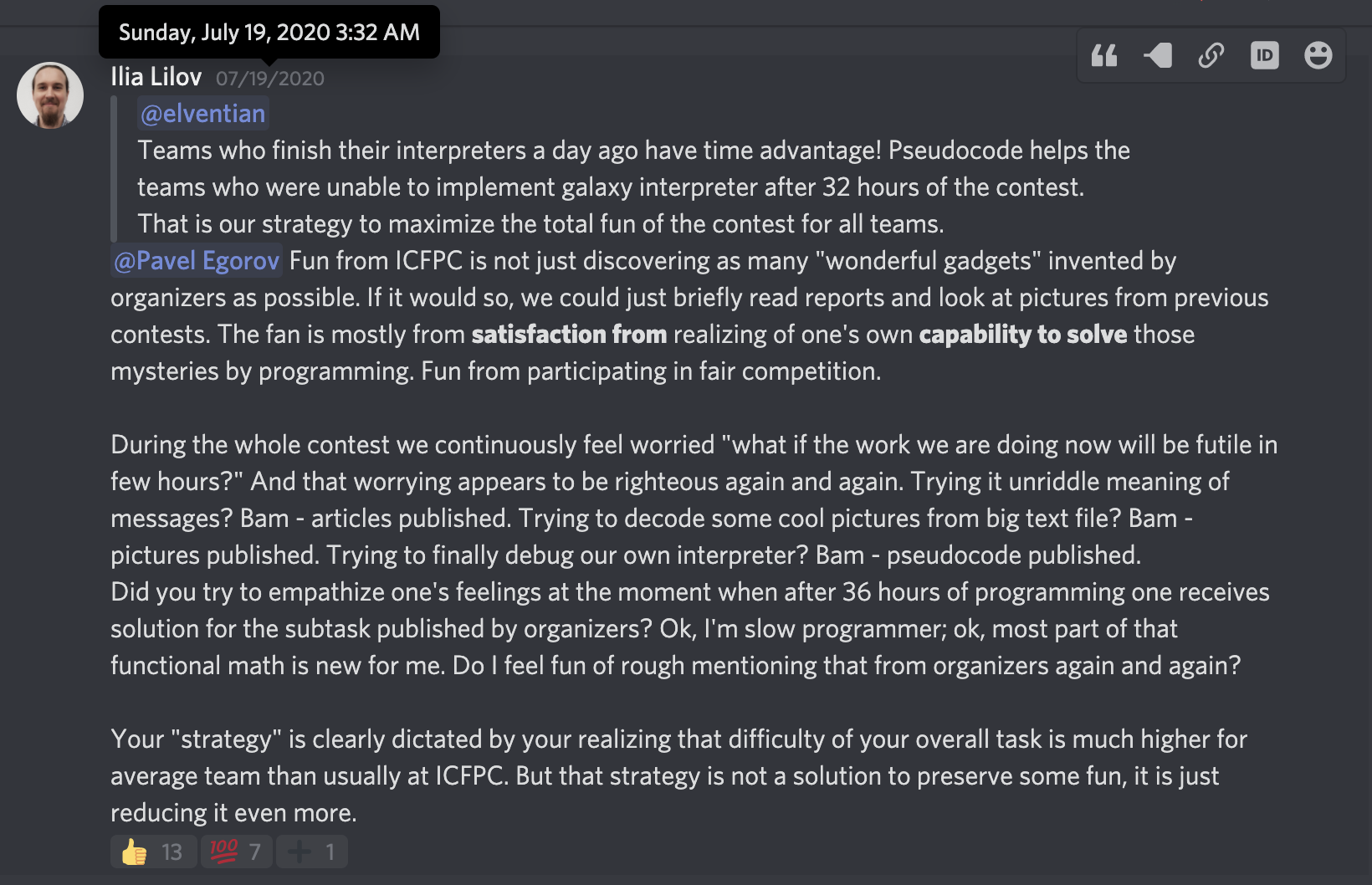

- This was really well said:

- Team

The Cat is #1!!was changing its name in scoreboard:

- and sometimes it was right: