HighLoad Cup 2018

Task description

The goal of the task was to implement a server listening to request by http and returning the correct answers as fast as possible. Here are the details of resource limitations:

The solution will be sent to a testing machine with an Intel Core i7 processor. The solution will be allocated 4 cores of 2.4 GHz, 2 GB of RAM and 10 GB of hard disk.

Results

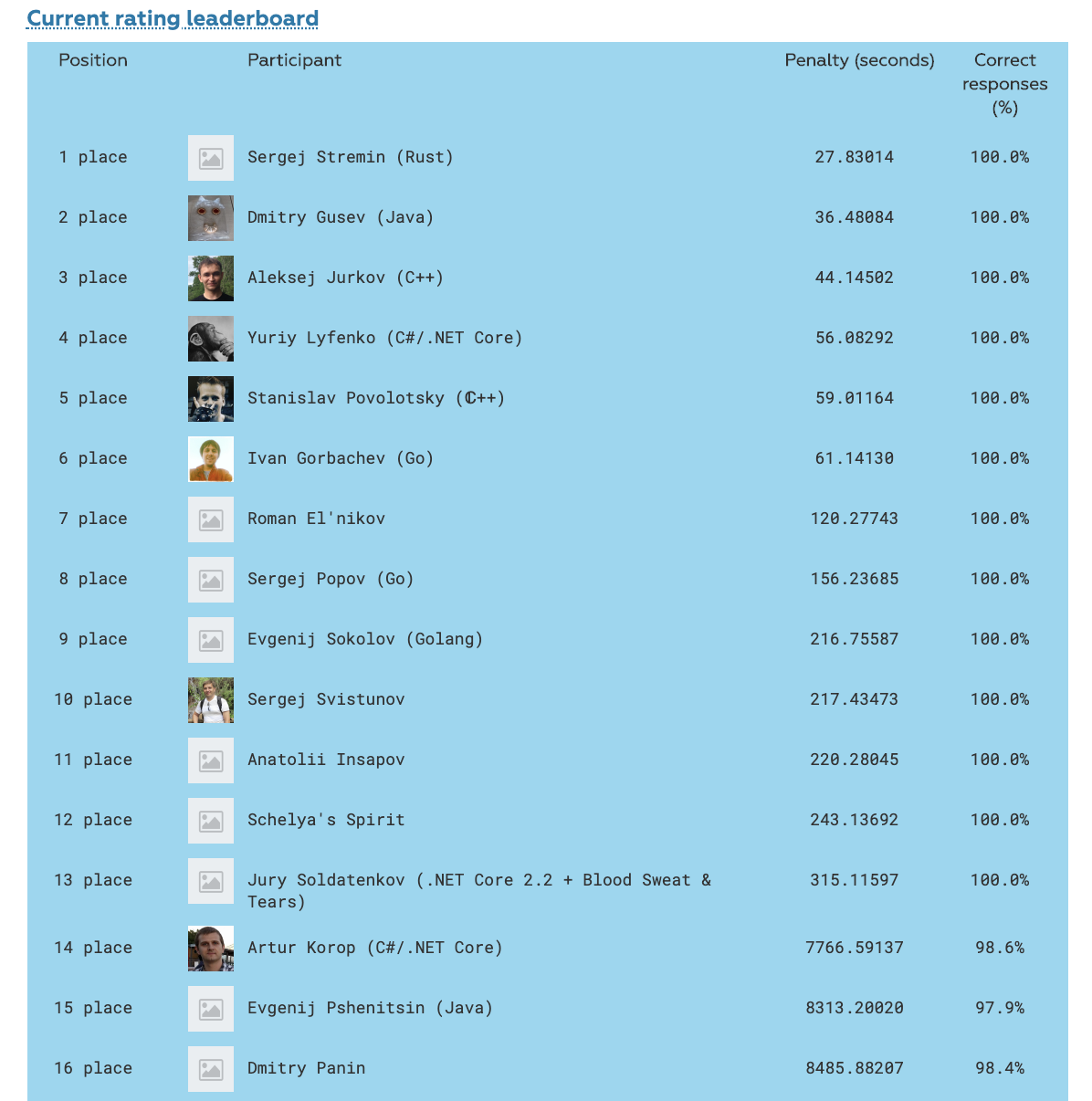

Main round

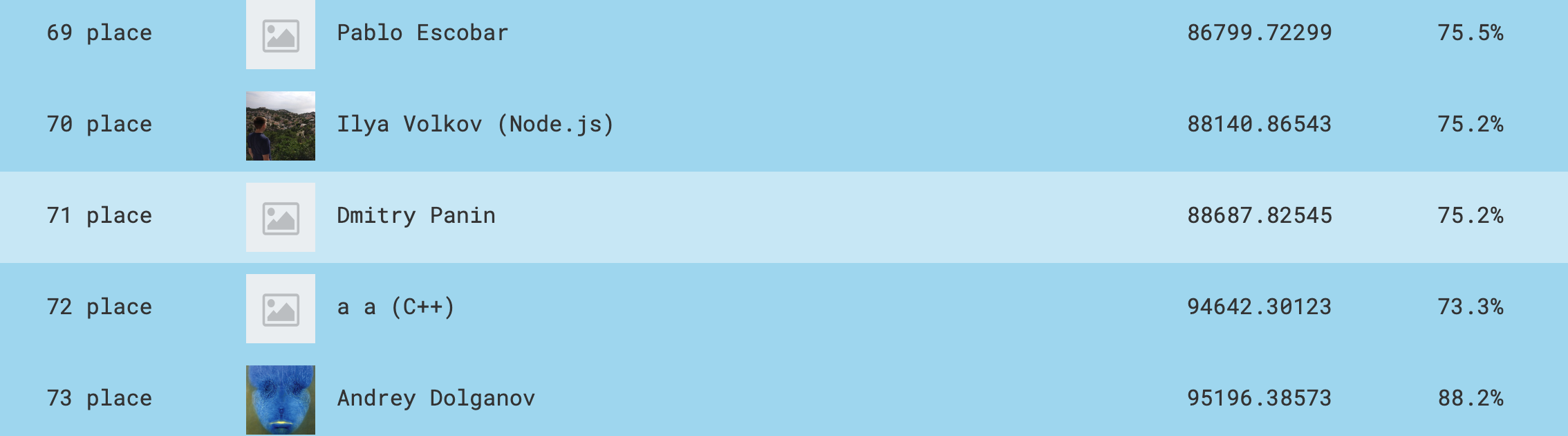

I’ve implemented solutions to 1st and 2nd parts and finished on 71st place with 75% of correct responses and ~88K seconds of penalty:

Sandbox

Afterwards I finished the implmementation for updates which increased by correct responses rate to 98.4% and ~8K seconds of penalty:

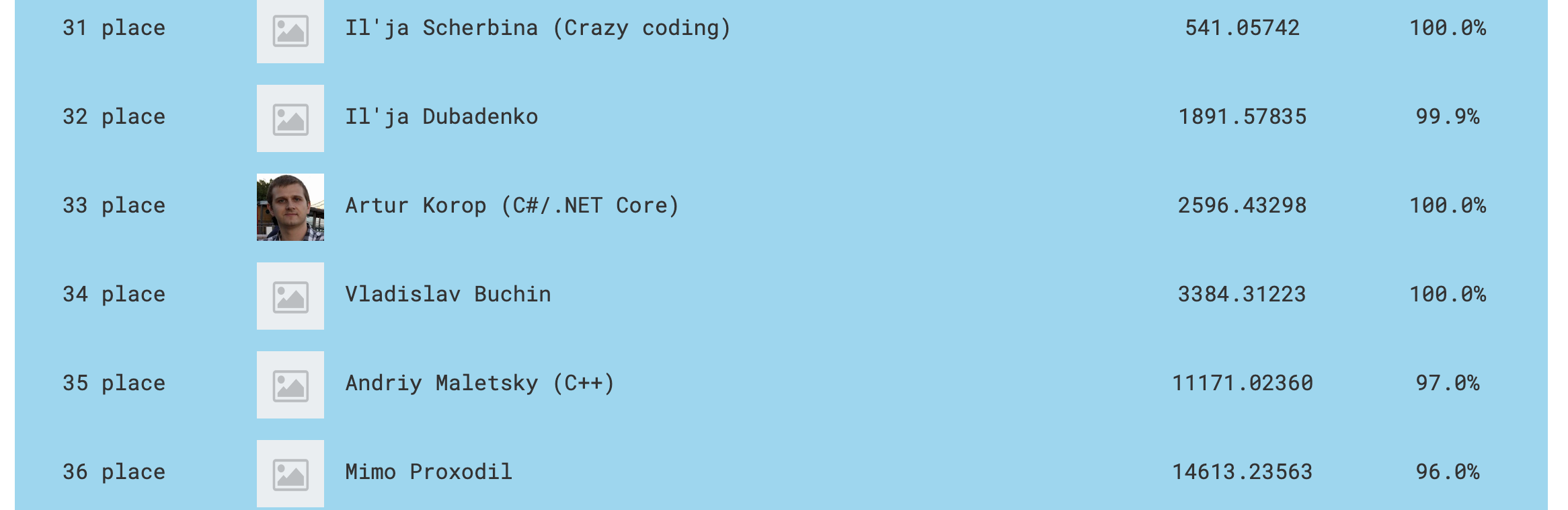

That would have put me on 35th place in main competition:

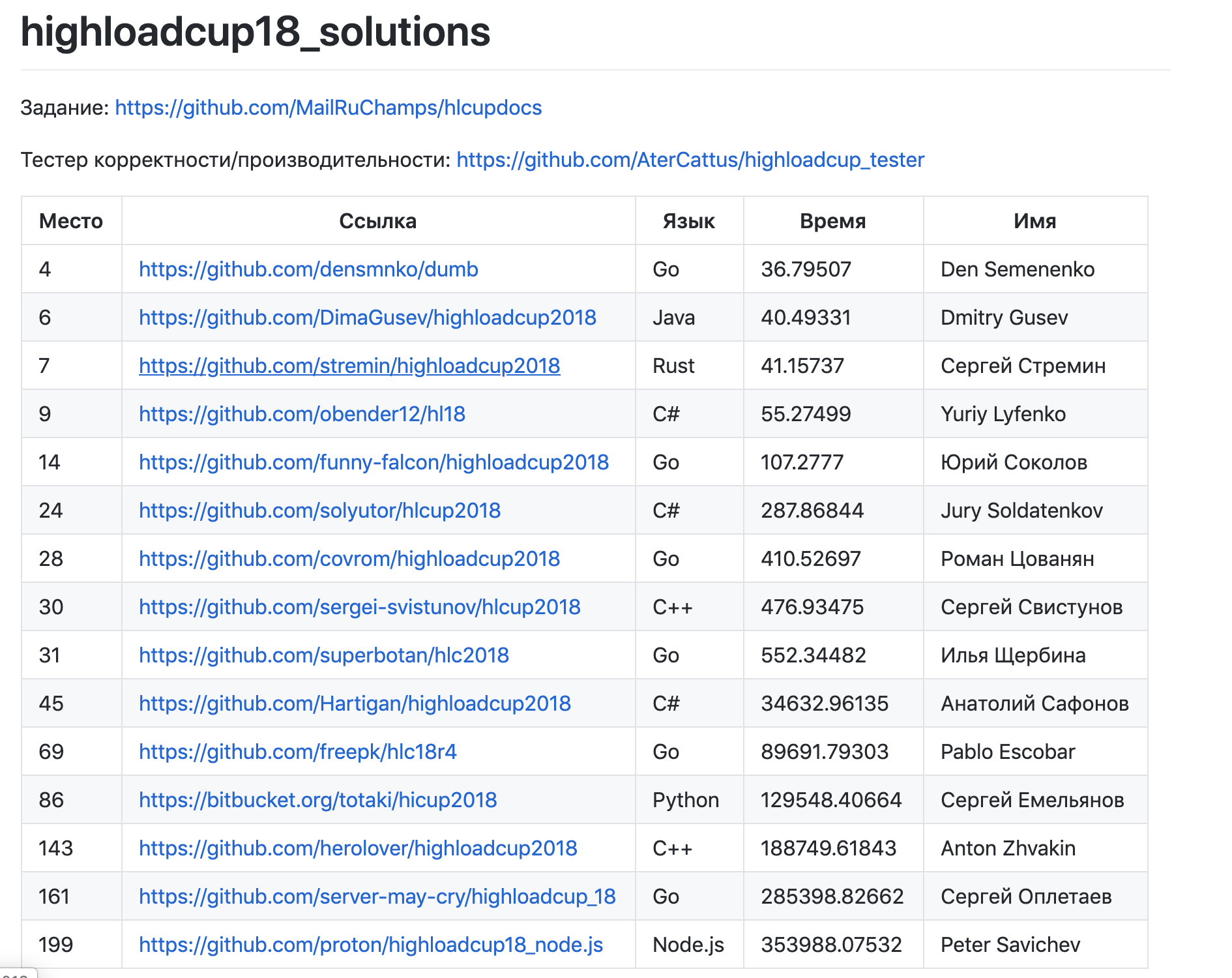

Other solutions: https://github.com/proton/highloadcup18_solutions

My Solution

Data types

The task is to provide results as fast as possible, so it’s good to have indexes for speeding up computation. But available memory is only 2GB, so I had to strike the balance between memory and compute.

First of all we need to store input data for all account. As the ids were consecuitive I just used std::vector<AccountData> with a few percent bigger than initial dataset to account for new inserts in phase 2.

We need to compress various strings commongly used in data: city, country, interests. The common technique is to use dictionary and encode those as integers of corresponding size (int16, int8 and int8 respectively) and use those integers everywhere instead. Templated class IdValueMap<IdType, ValueType> encapspulates that to create ids on demand: source code. Not only this saves on space it also improves performance as integer comparison is much faster then string based.

Filter API

This API was about returning a list of account data for using matching certain criteria.

The naive approach is to iterate backwards for all accounts (we need results in descending by id order) and check each account for match until we get required number of results.

To implement that I’ve introduced interface Filter with virtual method matches that each specific filter would implement:

struct Filter {

virtual bool matches(AccountId accountId, const AccountData& data) = 0;

};

There was quite a lot of boilerplate code, but fortunately it was fairly straightforward.

Obviously this doesn’t work well on sparse matches. But we can try some optimizations first and then fallback to naive approach if none of the optimizations triggered.

For some of the filter types we can precompute single-value index: map<Value, vector<AccountId>>:

// single value index

UsersAtIntIndex usersAtInterestId;

UsersAtIntIndex usersAtStatus;

UsersAtStringIndex usersAtCountry;

UsersAtStringIndex usersAtCity;

UsersAtStringIndex usersAtSex;

UsersAtStringIndex usersAtEmailDomain;

std::unordered_map<YearShort, AccountIdList> usersAtJoinedYear;

std::unordered_map<YearShort, AccountIdList> usersAtBirthYear;

On the Filter interface we can indicate this via API:

struct Filter {

virtual bool supportsLookup();

// Iterator is expected to go from biggest ids to smallest ones

// should only be called when supportsLookup == true

virtual std::unique_ptr<IdIterator> findRemainingItems();

virtual int32_t estimateOutputSize();

}

Then I iterated through all filters and selected the one with smallest estimateOutputSize() and rewrote the query to iterate through ids in findRemainingItems of that filter + a naive Filter::matches on remaining ones:

struct OptimizedFilter {

std::unique_ptr<Filter> lookupFilter;

std::vector<std::unique_ptr<Filter>> filters;

};

I didn’t bother doing a merge sort on lists from 2 filters that supported lookup, as it was already working good enough. But this was a potential future optimization.

For interests::contains I was using lookup by smallest field + a hack to not skip interests filter when doing naive match.

For likes::any I implemented the ability to combine 2 IdIterator-s into a single one by using virtual merge sort of lists. Then this allows to combine any number of iterators into a single one and use that for lookup.

Group API

This API is about running group by a combination of certain breakdowns and returning aggregated counts for each of the buckets. Possible breakdowns are

statussexinterestcountrycity

Additionally there might be a filtering stage for any of those breakdowns or by fields joined or birth.

As in filter task I started with naive brute-force approach and then was adding possible optimizations as needed. GroupAggregationItem represents aggregation bucket and then GroupAggregationMap = unordered_map<std::string, GroupAggregationItem> represents sparse result of aggregation. Then we can iterate through all accounts and compute this map. Obviously this worked ridiculously slow on 1M accounts so I had to use come up with something else.

First observation is that the input space is pretty small: we only need to store top50 top or bottom buckets for a query. So using naive approach we can precompute ahead of time combinations of breakdowns and just returned cached results.

For filtering fields we can pretend they could be breakdowns as well and throw them into breakdowns being precomputed. Overall I was pre-computing all 1,2,3 dimensional breakdowns assuming one of those could be fake joined or birth (both of them never appeared in query)

These are the following optimizations I am trying in order before switching to naive approach (which IIRC eventually wasn’t actually happening after I implemented all these optimizations):

tryFilterBreakdownIsCachedOptimizationtryNoFilterCachedOptimizationtryLookupOptimization

tryFilterBreakdownIsCachedOptimization - the idea behind this optimization is that if we have a query:

filter(C=c0) + GROUP(A, B)

we can re-write it to be

GROUP(A, B, C) + filter(C=c0) + DROP_COLUMN(C)

Which means that instead of filtering users and then grouping them, we would group by columns that include filtering field and then filter buckets based on that column value.

tryNoFilterCachedOptimization - this is the optimization when there are no filters, but the breakdown was precomputed. We can just return that.

tryLookupOptimization - this the remaining optimization. From the filters we can select the most sparse one (using helper functions from previous part filter API) then iterate through all users matching this condition and update aggregation map using naive approach.

Recommend API

In this API, for a given user we need to find all users with following properties:

- they are of opposite sex

- from a given country/city

- has at least 1 common interest with that user

- return

limitresults sorted by(premium, status, numInterests, ageDifference, accountId)tuples

I hashed tuple (premium, status, sex) into one single number bin and then precomputed RecommendIndex: bin -> interest -> vector<UseId>.

Then I used the following approach:

- Iterate through

bin-s in increasing order - For each interest of a given user collect users from this

(bin, interest)bucket - Filter out those that doesn’t match given country or cirty

- While doing this we can easily compute number of common interests

- Sort selected users from final criteria and append to results

- If the limit is not reached: repeat with next bucket

Suggest API

In this API, for a given user we need to return all users that liked similar users this one has. Similar to recommend API we need to filter out users outside of provided country or city.

For this API I precomputed reverse index of likes: for each user X store a list of userId that liked user X.

Then the approach becomes 2 nested loops:

for like in user.likes:

for liked_by in like.user.liked_by:

similarity(user, liked_by.user) += 1.0 / abs(like.ts - liked_by.ts)

Updates

During competition due to the lack of time I didn’t implement anything smart for updates: just validated input and returned early. This passed 100% requests of 2nd phase, then obviously the 3rd phase was wrong - it got around ~20% of correct answers.

Afterwards I spent some time implementing that. Basically, during update time I do only 2 things:

- update account data

- update cached group result

Then after 2nd phase I rebuild all the indexes - there is enough time to do that (~10s).

Do detect that I keep track of the last request time and if there was no requests for 1.2s then call into rebuilding indexes. Works good enough and finishes in less than 3s:

?| 1242.523s | [HttpHandler.h:368] #POSTS = 90000; No new requests after 1206 ms, rebuilding

?| 1242.523s | [IndexLoader.h:78] Mem = 1870.70 Mb | Rebuilding indexes

?| 1242.523s | [IndexLoader.h:266] Mem = 1870.70 Mb | Building single value indexes

?| 1243.235s | [IndexLoader.h:286] Mem = 1918.47 Mb | Sorting single value indexes

?| 1243.469s | [IndexLoader.h:114] Mem = 1918.47 Mb | Building recommend index

?| 1243.679s | [IndexLoader.h:93] Mem = 1916.86 Mb | Sorting account data fields

?| 1245.288s | [IndexLoader.h:82] Mem = 1916.86 Mb | Rebuilding indexes finished

?| 1245.288s | [HttpHandler.h:379] Rebuild finished in 2765 ms

Alternative to that would be to count number of requests, but that’s pretty fragile and if you loose just one request due to network failure - you are screwed.

Memory usage

During start up, all loaded indexes would take 1.6Gb of data. Here is the breakdown:

- Vector of

AccountDataallocated for all1.32Musers:383Mb - Populate accounts with data (likes, interests):

600Mb - Backwards likes index:

315Mb - Precomputed group results:

150Mb - Single value indexes:

50Mb - Recommend index:

7Mb

Important part here as the vectors are built - to preallocate a litte bit of extra capacity for the 2nd phase to avoid reallocation of vector capacity as it would grow 2x and eat extra memory.

It takes about ~3 minutes to load all data and pre-compute indexes. I didn’t optimize that part as 10 minutes provided in task description was more that enough. Here are the logs from index loading part:

// ?| 0.001s | [./IndexLoader.h:31] Mem = 0.99 Mb | Allocating 1320001 accounts

// ?| 0.210s | [./IndexLoader.h:341] Mem = 383.70 Mb | Loading accounts data

// ?| 80.572s | [./IndexLoader.h:283] Mem = 987.48 Mb | Computing like hint

// ?| 82.015s | [./IndexLoader.h:459] Mem = 987.49 Mb | Applying like size hint

// ?| 82.171s | [./IndexLoader.h:468] Mem = 993.37 Mb | Building backward likes index

// ?| 91.823s | [./IndexLoader.h:88] Mem = 1314.07 Mb | Building set of emails

// ?| 92.755s | [./IndexLoader.h:130] Mem = 1401.08 Mb | Precomputing group results

// ?| 92.755s | [./IndexLoader.h:214] Mem = 1401.11 Mb | Precomputing group results with 55 group lists

// ?| 96.986s | [./IndexLoader.h:232] Mem = 1401.21 Mb | Finished 1D

// ?| 121.640s | [./IndexLoader.h:232] Mem = 1407.98 Mb | Finished 2D

// ?| 183.270s | [./IndexLoader.h:237] Mem = 1549.90 Mb | Finished Group Results

// ?| 183.270s | [./IndexLoader.h:248] Mem = 1549.90 Mb | Building single value indexes

// ?| 183.658s | [./IndexLoader.h:268] Mem = 1603.36 Mb | Sorting single value indexes

// ?| 183.682s | [./IndexLoader.h:96] Mem = 1603.36 Mb | Building recommend index

// ?| 183.975s | [./IndexLoader.h:75] Mem = 1610.28 Mb | Sorting account data fields

// ?| 185.143s | [./IndexLoader.h:54] Mem = 1610.28 Mb | Index loading finished

Tools

I implemented a few tools in python: tank.py (to run requests againts canonical answers) and poster.py to run post requests. This was helpful in local iterations and narrowing down the wrong responses.

Here are the latest numbers from tank.py with all above mentioned optimizations:

==> finished 14869 requests

==> filter (7662) --> avg 4.2 ms, total = 32533.2

==> group (2970) --> avg 6.0 ms, total = 17819.3

==> recommend (2632) --> avg 5.7 ms, total = 14885.6

==> suggest (1605) --> avg 4.1 ms, total = 6536.5

total time = 71774.5 ms

Misc

C++ is a pretty barebone language for this type of task - standard library is lacking many primitives, so you either need to copy paste things around or try to integrate outside code (which sometimes might be non-trivial).

third-party libraries

I used the following third-party libraries:

- civetweb: http webserver implemenations

- nlohmann/json: json parsing

- jemalloc: general purpose memory allocator (malloc replacement)

They were pretty easy to start using, so that’s was mostly the deciding factor between what to use.

civetweb

This library was relatively easy to use. You need to inherit from base class and then override 2 methods: handleGet and handlePost:

class HttpHandler : public CivetHandler {

public:

bool handleGet(CivetServer* server, mg_connection* conn);

bool handlePost(CivetServer* server, mg_connection* conn);

}

Inside these methods you can call mg_get_request_info(conn) that would return a reqInfo structure with fields reqInfo->request_uri and reqInfo->query_string that could be used to route the request further to proper API endpoint.

nlohmann/json

This was the easiest to start using json library I found - the biggest advantage that it’s header-only which means you don’t need to mess up with build system. Accroding to benchmarks it wasn’t the fastest one, but still has pretty good performance. My plan was to get things going and achieve correctness first (it had highest penalty) and then optimize the biggest bottlenecks. Potentially with rewriting json parsing with hand-written optimization. Spoiler alert: I didn’t get to that.

jemalloc

This wasn’t strictly necessary to use, but it was easy enough so I just plugged it in.

Basically you need to make install jemalloc and then pass the flags to your compilation options:

JEMALLOC_OPTS ?= -L`jemalloc-config --libdir` -Wl,-rpath,`jemalloc-config --libdir` -ljemalloc `jemalloc-config --libs`

It actually helped a lot with memory fragmentation - I’ve seen a number of cases when during the first phase the memory would just grow and grow. Turns out that was due to poor memory reuse so application wasn’t able to reclaim it from OS.

Docker

I haven’t worked with docker before, so I had to spend some time setting it up. Initially I took gcc docker as base, but then struggled with installing needed external depdendencies (such as unzip or third-part libraries jemalloc). Then I switched to ubuntu image and just used regular ubuntu apt-get commands to install gcc and other tools.

Since I was developing on mac I wasn’t able to build locally and send the image, so ended up running build commands as a part of start script. Luckily there was enough time given before requests started to do that.